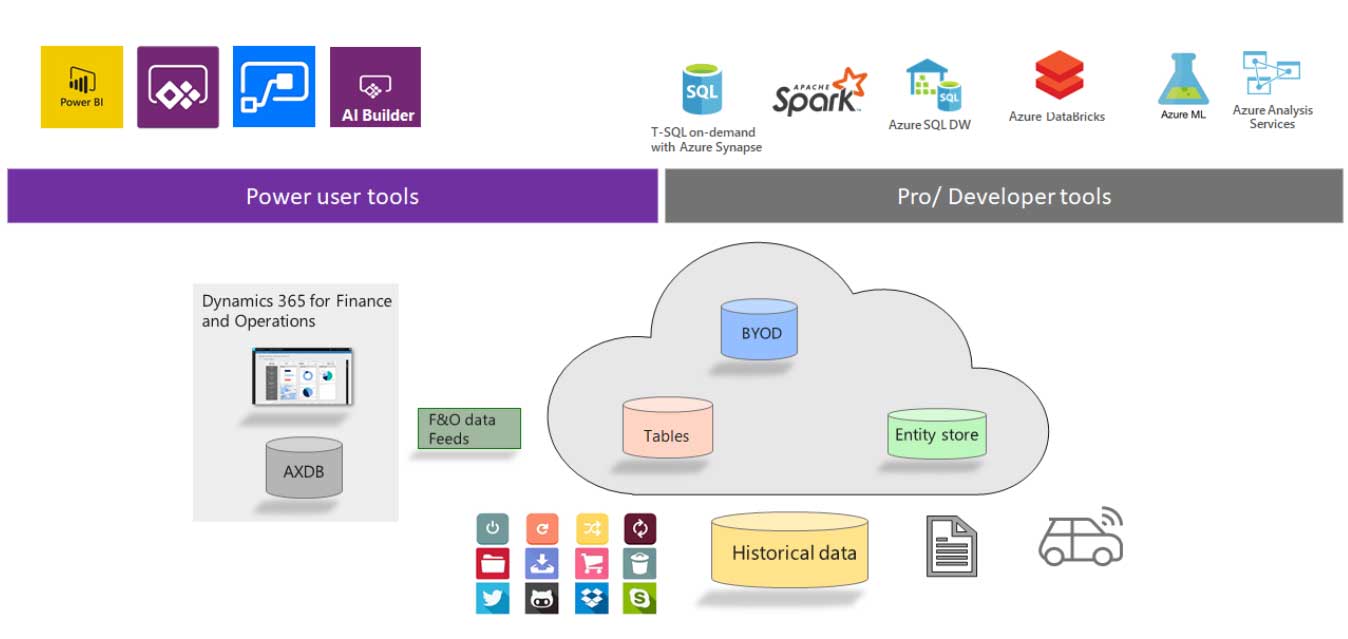

In this post, we will review the integration between D365F&O and Azure data lake.

We will see how to configure and enable the Data lake feature in F&O and how to activate the sync for tables from the UI.

then we will see how the data is stored in Data Lake gen 2 storage and how to access this data from Microsoft Power BI

Note: at this moment Data Lake feature is in preview in certain regions and only available in Tier-2 or higher sandbox environments

Azure Data Lake

Azure Data Lake Storage Gen2 is a set of capabilities dedicated to big data analytics, built on Azure Blob Storage.

Data lakes provide cloud storage that is less expensive than the cloud storage that relational databases provide. Therefore, large amounts of data can be stored in the cloud.

This data includes both business data that is traditionally stored in business systems and data warehouses, device and sensor data, such as signals from devices. In addition, Data Lake supports a range of tools and programming languages that enable large amounts of data to be reported on, queried, and transformed.

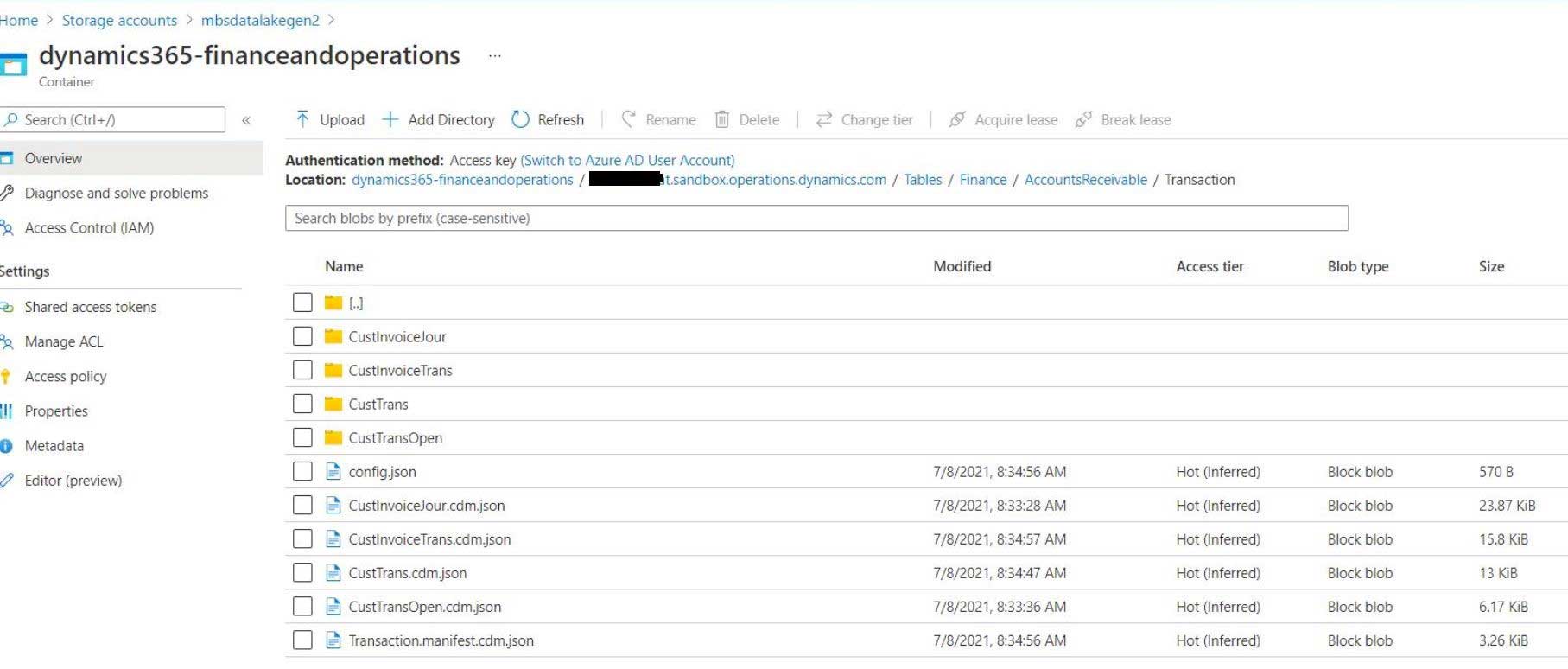

Data is stored in Data Lake to comply with the Common Data Model folder standard with the addition of a hierarchical namespace to Blob storage.

The hierarchical namespace organizes objects/files into a hierarchy of directories for efficient data access. A common object store naming convention uses slashes in the name to mimic a hierarchical directory structure.

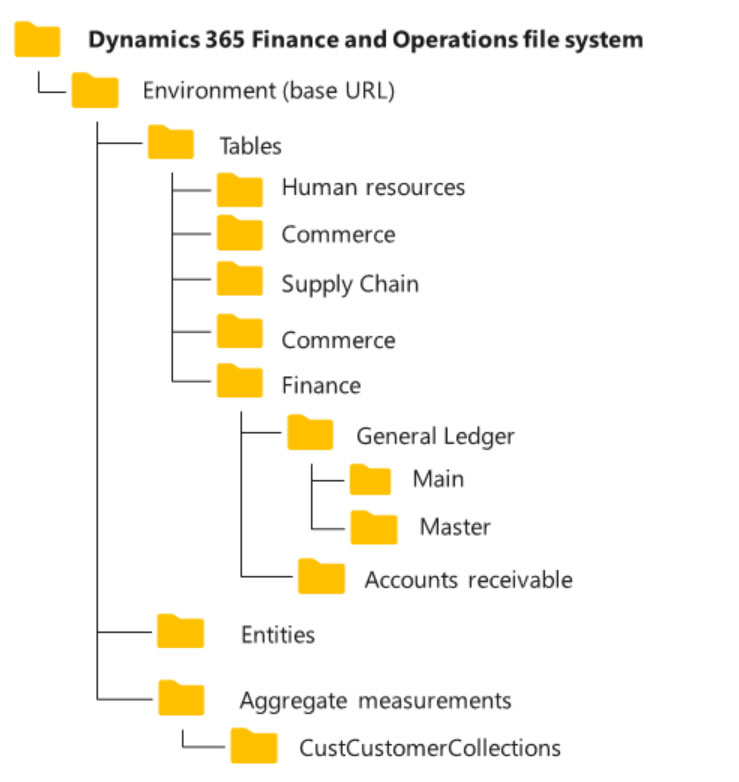

The following illustration shows the Common Data Model folder structure from Finance and Operations apps

Integration with Azure Data Lake for D365F&O is delivered in the form of an add-in installable from LCS power platform integration.

The Export to Azure Data Lake feature is based on CDC and a microservice that exports Finance and Operations app data to Azure Data Lake and keeps the data fresh

Currently only available in cloud-based Tier-2 or higher sandbox environments

Azure Data Lake Storage Gen2 Introduction | Microsoft Docs

Azure Data Lake overview – Finance & Operations | Dynamics 365 | Microsoft Docs

Change data in Azure Data Lake – Finance & Operations | Dynamics 365 | Microsoft Docs

Pre-requisites

Before install the feature we need to configure a set of Azure resources required when we install the add-in in LCS.

below are the required components that we need to setup.

1. Service Principal for Microsoft Dynamics ERP Microservices

2. Create an application in Azure Active Directory

3. Create a Data Lake Storage (Gen2 account) in your subscription

4. Grant access control roles to applications

5. Create a key vault

6. Add secrets to the key vault

7. Authorize the application to read secrets in the key vault

I will not review this steps in detail as they are well explained in the below link.

Configure export to Azure Data Lake – Finance & Operations | Dynamics 365 | Microsoft Docs

follow the steps described in the link above to configure the required Azure resources to allow us install the feature.

Power Platform integration

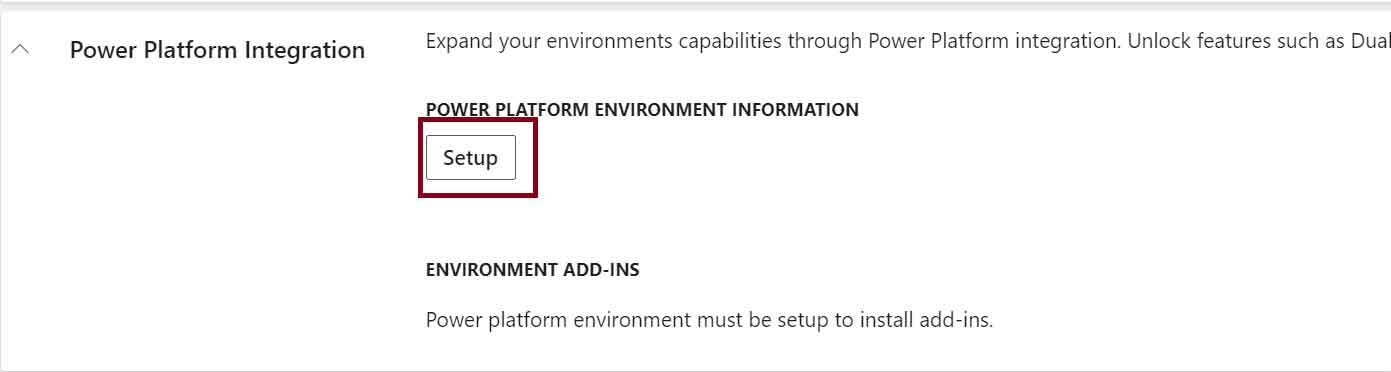

In order to install the feature Add-In, we need to enable Power Platform integration for our F&O environment in LCS.

in Tier-2 or higher sandbox environment, navigate to the environment page in LCS and locate the power platform integration section.

Use the Setup option to start the process to deploy a power apps environment.

Install Data Lake add-in

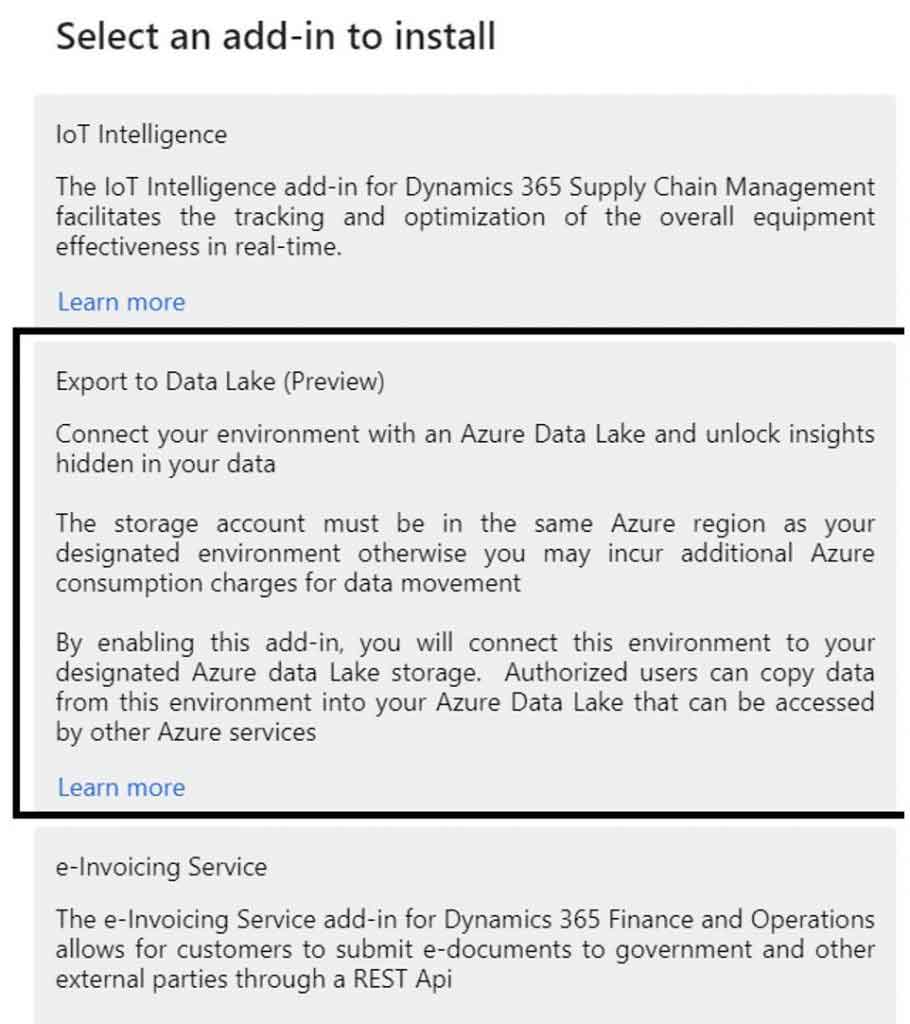

As mentioned previously, the Data Lake feature is installed in the form of an add-in.

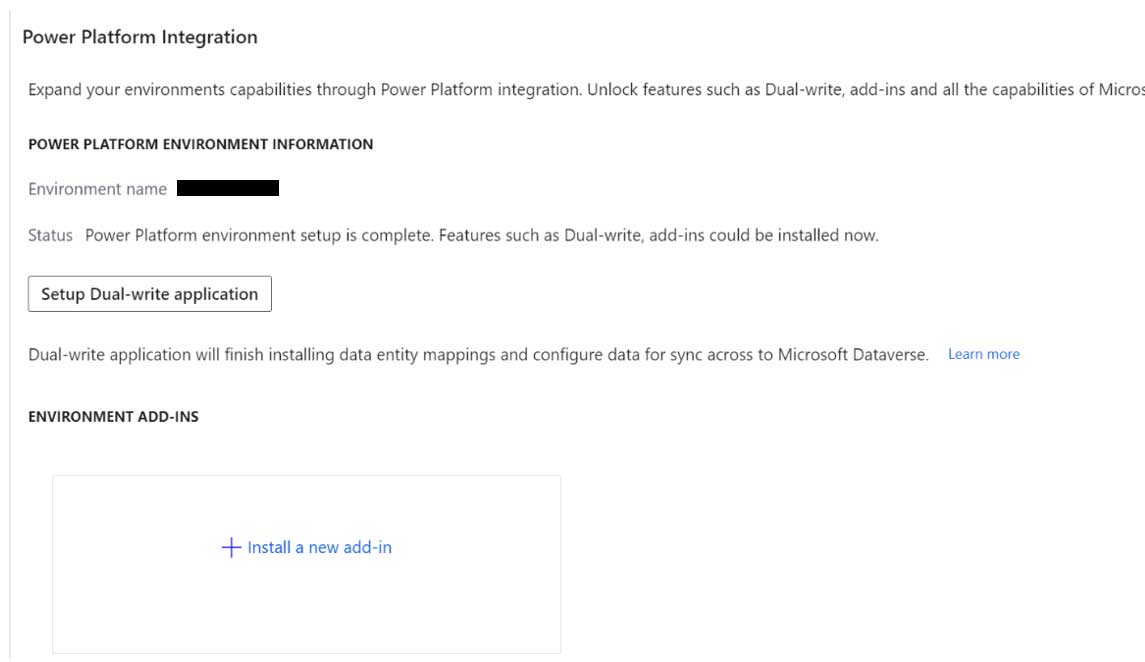

Select the option Install a new add-in

Among the different add-ins available we will find Export to Data Lake, select this option

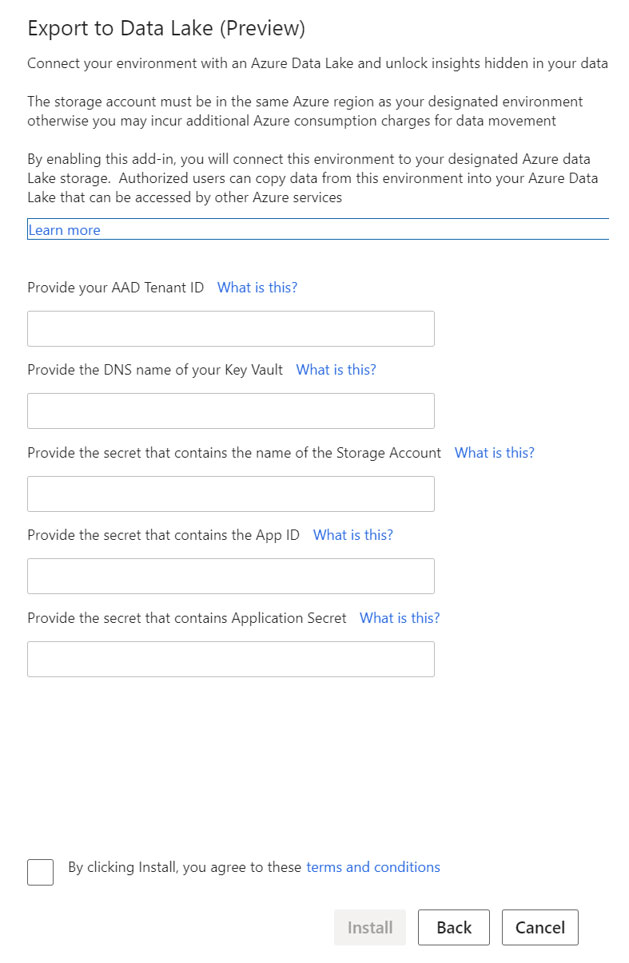

the screen below will pop up requesting the required details that we will have after performing all the steps in the pre-requisites

Configure export to Azure Data Lake – Finance & Operations | Dynamics 365 | Microsoft Docs

Fill in the required details and install

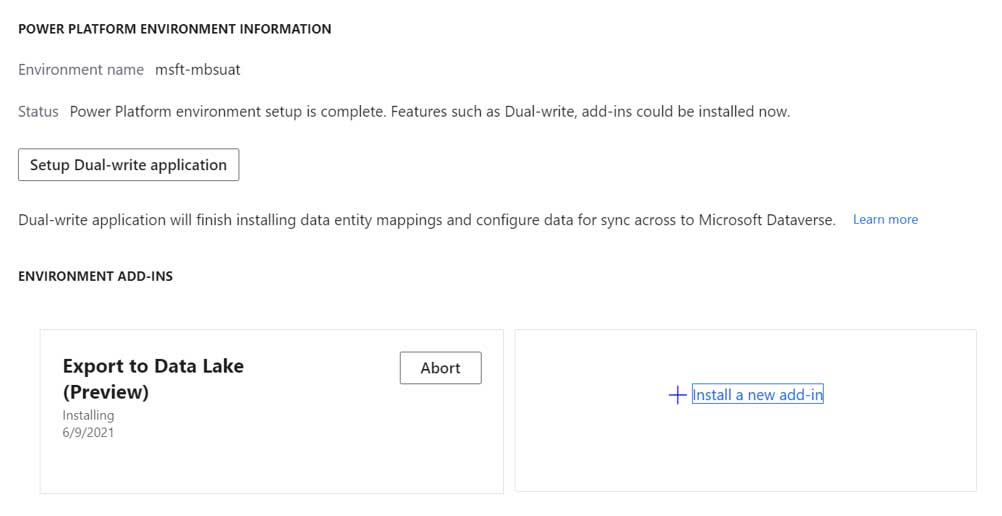

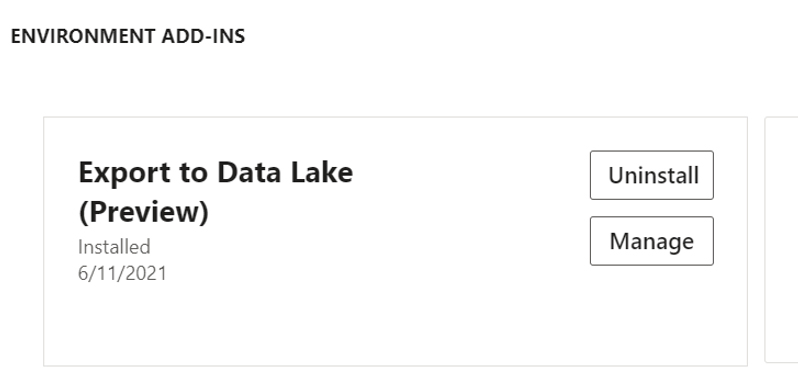

If we added all the required configurations we should see the pop-up below

Once the add-in is installed we will see it in the installed add-ins section

Finance and Operations apps data in Azure Data Lake – Finance & Operations | Dynamics 365 | Microsoft Docs

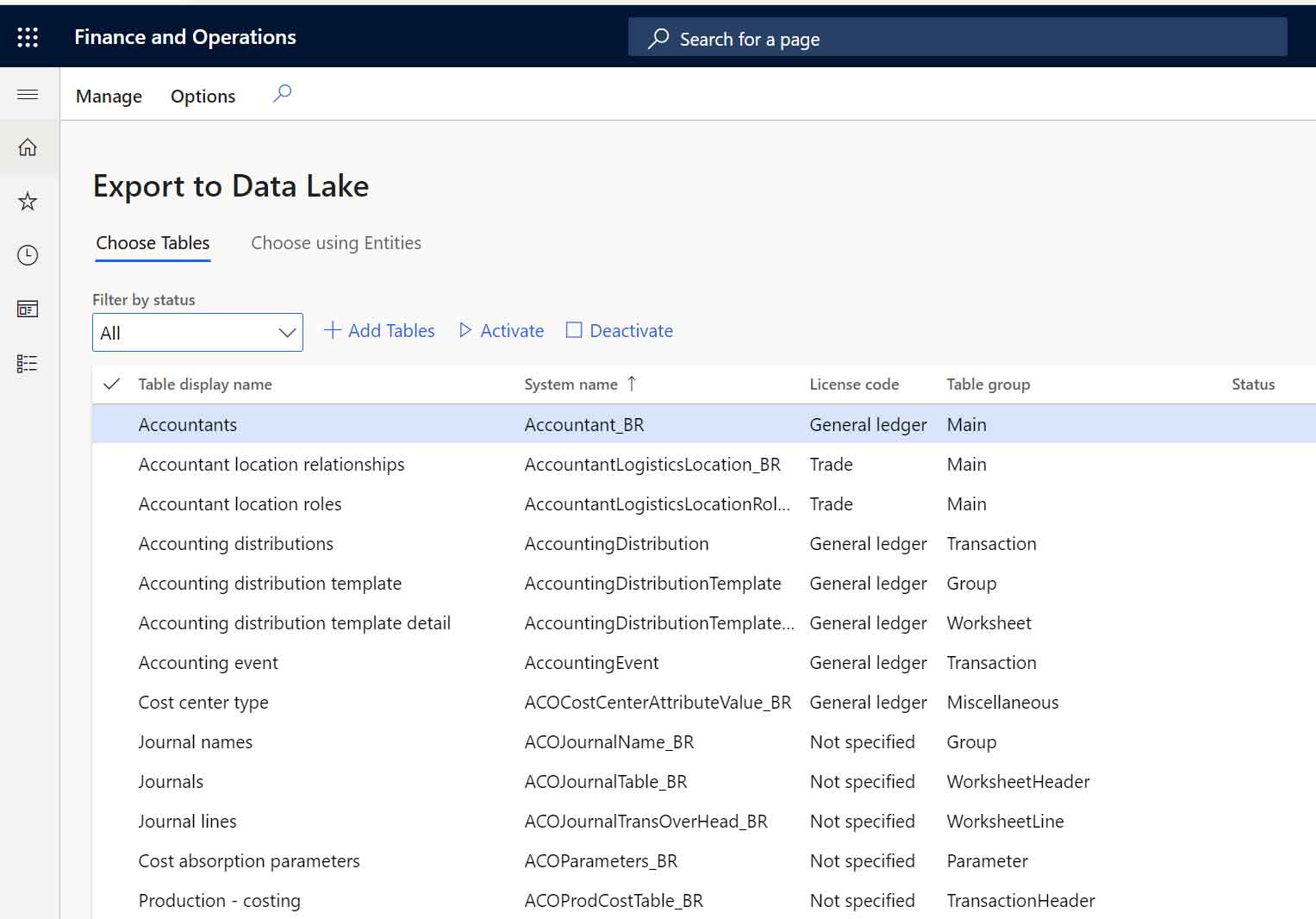

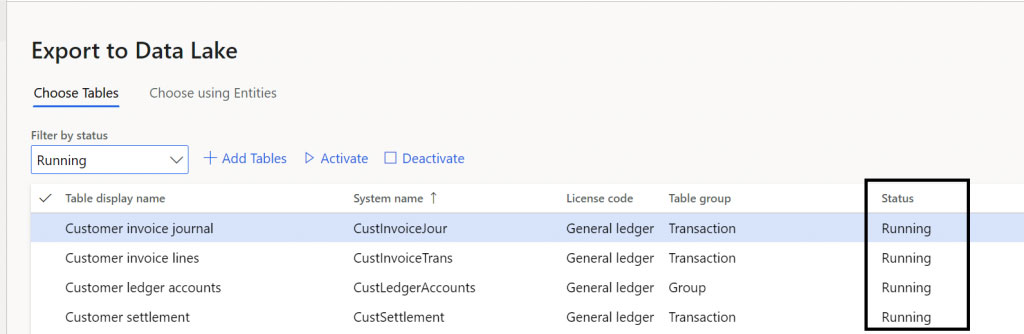

&mi=DataFeedsDefinitionWorkspace

Select the table to publish and use the Activate option.

Entities will change status to Initializing and then Running or Error.

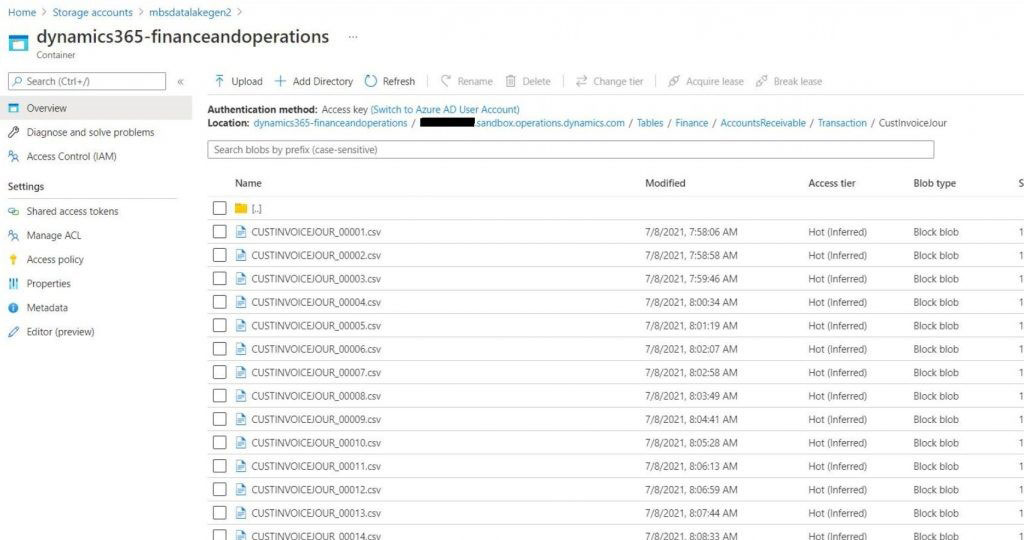

Working with data in Data Lake

Once the data is published we can leverage the multiple tools to explore and analyze the data from power user tools like Power BI or Power apps, to azure components like Synapse, Data bricks and others

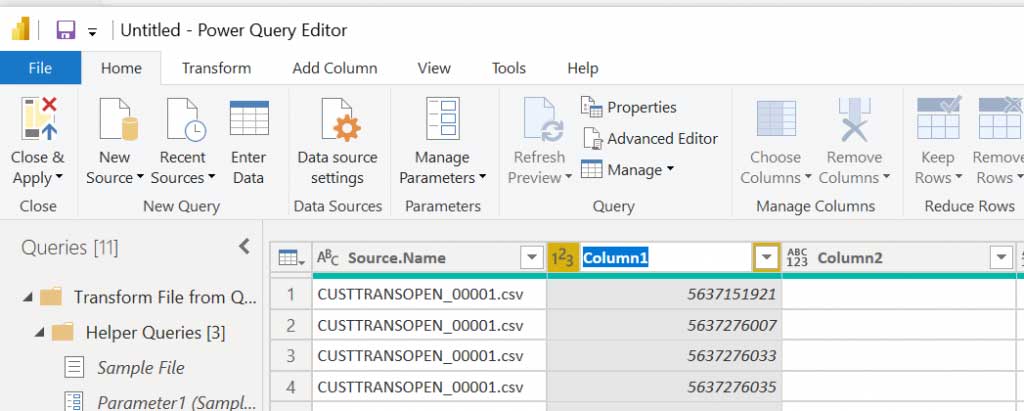

Below we will see the steps to access this data from MS Power BI

Power BI from Data Lake

We can build Power BI reports directly consuming the data from Data Lake.

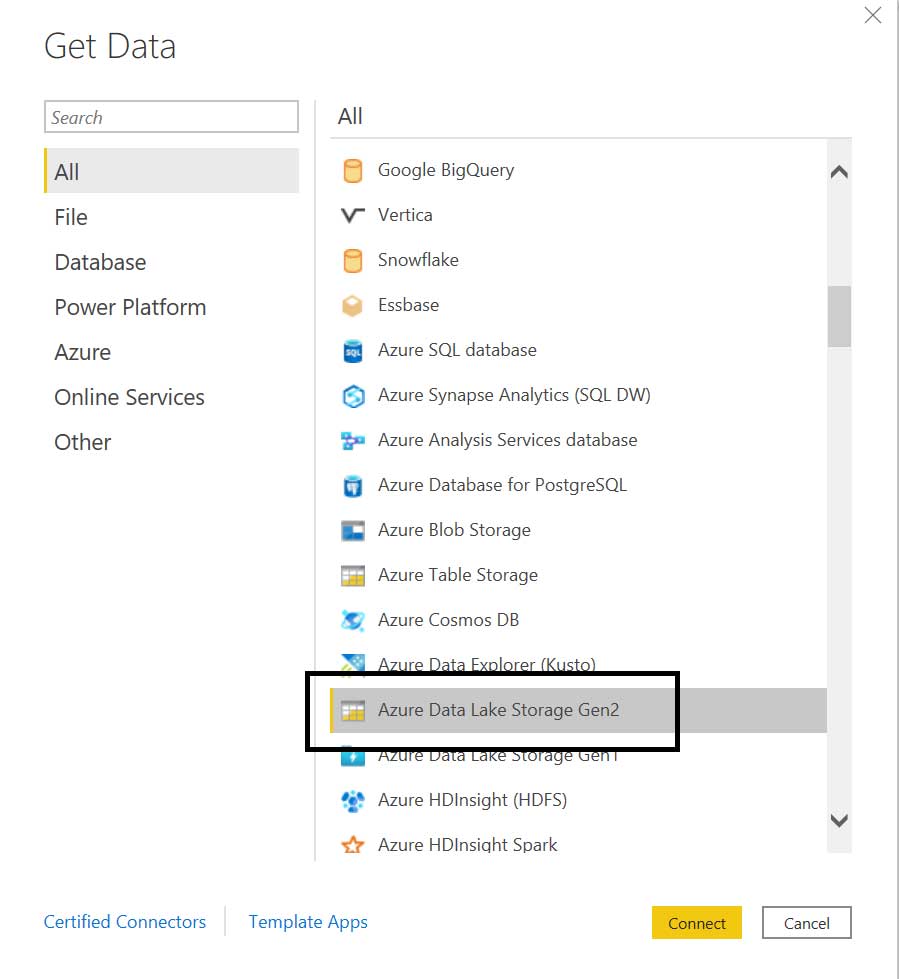

From Power BI desktop, we can select as data source Azure Data Lake Storage Gen 2

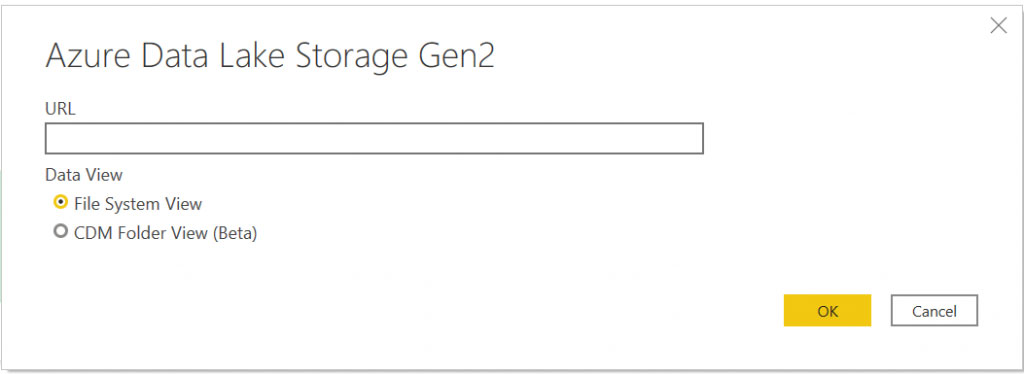

https://<accountname>.dfs.core.windows.net/<filesystemname>/<subfolder>

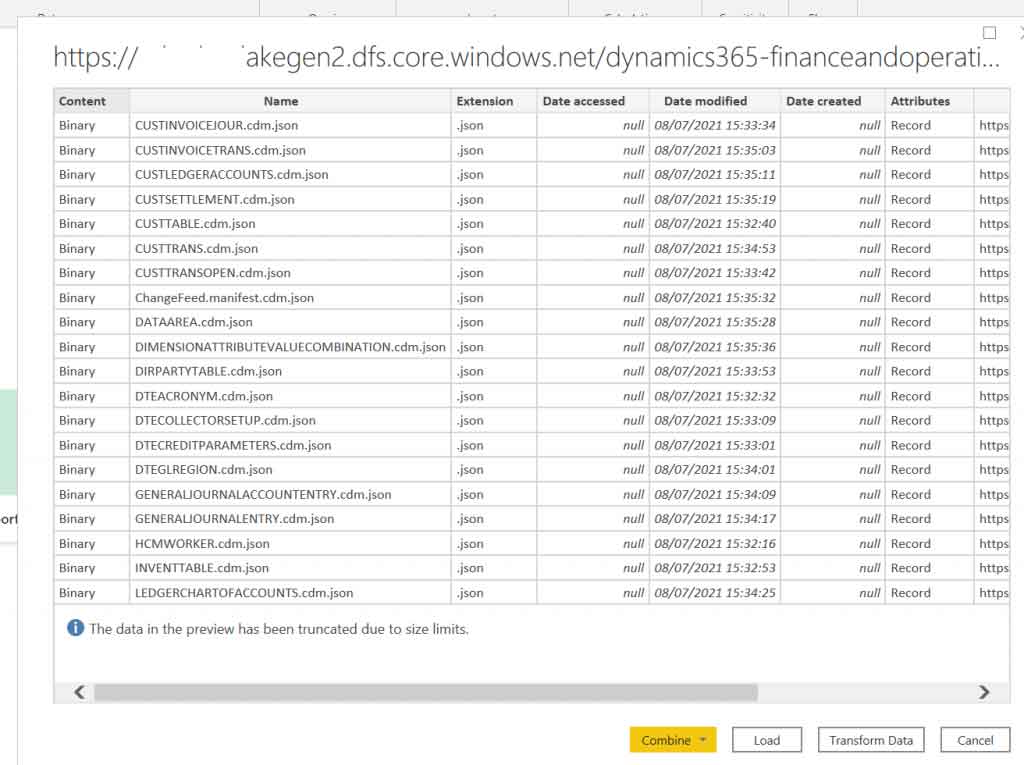

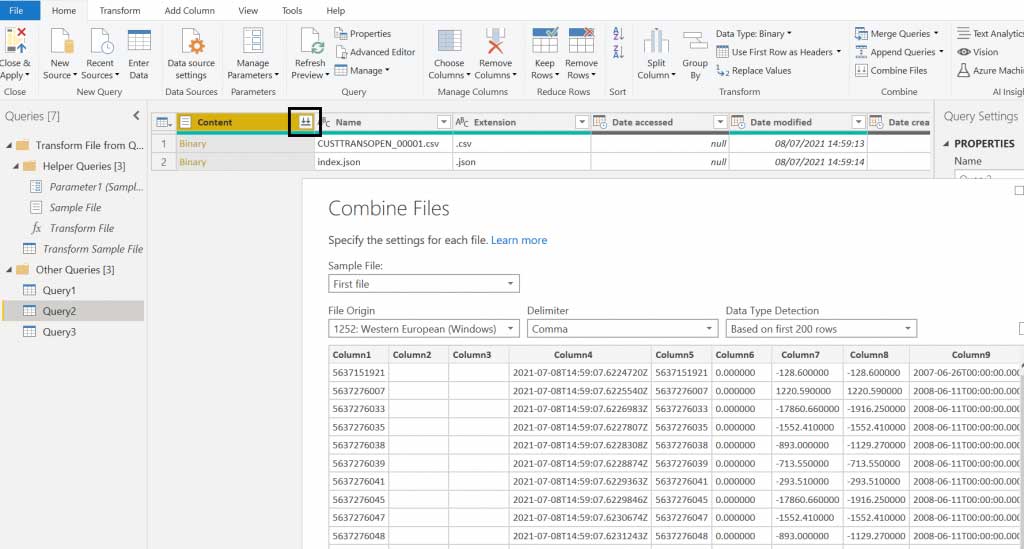

Once connected we will see available data depending on the sub folder informed

We are ready to start and to use the data in our visualizations.

See more details below:

Analyze data in Azure Data Lake Storage Gen2 by using Power BI | Microsoft Docs

Happy DAXing…..!